I read a lot of articles about COBOL, IBM, mainframes etc. and I have noticed a certain pattern in them: The authors of most of these articles don’t know much about these topics (that’s natural when a topic has become that obscure), so they can only ask someone who knows more. But when a topic is that obscure, you will hardly find experts who don’t actively advertise their knowledge of it, i.e. they make their living from services around these topics, so you won’t get objective information. As a result, I see the same statements in many of these articles, and they are pretty much verbatim copies of the marketing claims of IBM, MicroFocus, CobolWorks, Cobol Cowboys, etc.

I want to provide some more objective information. For most of these topics I have written longer articles, where I go deeper into the details and back them up with more data. Here, I just want to give an overview of the typical major claims and briefly discuss to what extent they are true, misleading or downright lies.

I want to emphasize that most of them were true at some point in history, but COBOL advocates have never reassessed whether the claims are still true (most of them aren’t anymore). In particular, most numbers come from one survey in 1997 that was not representative and heavily biased.

Most claims are from 1997

Most claims about COBOL can be traced back to a „Gartner“ survey that was conducted in 1997. That survey was actually conducted by DataPro, but DataPro was bought by Gartner in 1997 – that is why it is attributed to „Gartner“.

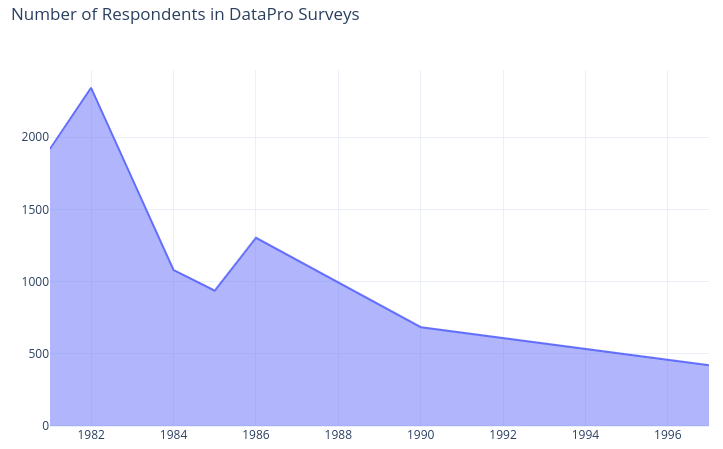

In the 70s and 80s, DataPro had conducted surveys on mainframe usage every year. The results were often discussed in Computerworld – many of these can be read on Google Books. But in the late 80s / early 90s, DataPro stopped conducting these surveys when the number of participants had declined so much that the results wouldn’t have been meaningful anyway. The „Gartner“ survey in 1997 had only 421 participants. This sample is too small to be representative. Scaling its results up to the IT market of the entire world is unsound, to say the least.

The decreasing number of respondents also implies an increasing survivorship bias: Respondents who were less satisfied with their mainframes in one year are more likely to dispose of their mainframes and not appear in the next survey. The more satisfied mainframe users „survive“ and then constitute a larger share of the decreased number of respondents. I have actually read a piece by someone who counted the resulting „increase in user satisfaction“ as an indication in favor of mainframes.
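The effect is easy to reproduce with made-up numbers. The following Python sketch assumes a hypothetical starting population of 1,000 respondents, half of them satisfied, and hypothetical yearly drop-out rates of 5% for satisfied and 30% for dissatisfied users. None of these figures come from the DataPro surveys. Nobody ever changes their opinion in this toy model, yet the measured satisfaction rises with every survey.

```python
# Toy model of survivorship bias in a yearly user survey.
# All numbers are hypothetical and only illustrate the mechanism.
SATISFIED_DROPOUT = 0.05     # assumed share of satisfied users who leave per year
DISSATISFIED_DROPOUT = 0.30  # assumed share of dissatisfied users who leave per year


def simulate(satisfied, dissatisfied, years):
    """Return a list of (respondents, satisfaction_share), one entry per survey."""
    history = []
    for _ in range(years):
        total = satisfied + dissatisfied
        history.append((total, satisfied / total))
        # Nobody changes their mind; users only drop out of the survey.
        satisfied *= 1 - SATISFIED_DROPOUT
        dissatisfied *= 1 - DISSATISFIED_DROPOUT
    return history


history = simulate(satisfied=500.0, dissatisfied=500.0, years=5)
for year, (respondents, share) in enumerate(history):
    print(f"survey {year}: {respondents:6.0f} respondents, {share:.0%} satisfied")
```

The number of respondents shrinks while the share of satisfied respondents grows, which is exactly the pattern that can be misread as rising satisfaction.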

These surveys were also exclusively about mainframe usage, so they had a strong selection-bias. Therefore, their results have never been applicable to the IT market outside of the mainframe-market in the first place. COBOL being predominant on about 50,000 mainframes (where it was preinstalled and nearly the only available programming language) doesn’t make it predominant on the billions of Windows, Mac and Unix computers (where it isn’t preinstalled and you have plenty of better and cheaper options).

Still – to this day, almost all articles cite the „Gartner“ survey – usually obscured by long chains of references to other articles. The chains of references are often further obscured by articles not being available anymore. I often had to use the Wayback Machine… So when you see a newspaper article in a museum that claims that COBOL is still relevant – that clearly proves that COBOL is still relevant today!

Many articles cite the exact numbers from the „Gartner“ survey. A few articles also scale the numbers up linearly to the release date of the articles. They would probably also predict that the average temperature in California will be 225.7°F in September 2021, because it was 76.2°F in September 1998 and 89.2°F in September 2000.
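For reference, this is all the „method“ behind such a prediction. The sketch below is a plain two-point linear extrapolation in Python, using only the two temperature values quoted above; the function name is just an illustration.

```python
def extrapolate_linearly(x0, y0, x1, y1, x):
    """Extend the straight line through (x0, y0) and (x1, y1) to position x."""
    slope = (y1 - y0) / (x1 - x0)
    return y1 + slope * (x - x1)


# September averages in °F as quoted in the text.
temperature_2021 = extrapolate_linearly(1998, 76.2, 2000, 89.2, 2021)
print(f"{temperature_2021:.1f} °F")
```

Two data points always fit a straight line perfectly, which says nothing about whether the trend continues for another 21 years.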

The 6 big numbers

Many articles tell you that COBOL is still extremely relevant and back this claim with the following 6 numbers:

- 50-60% of all the code in the world is written in COBOL (yes, they actually claim this!)

- There are 150 to 300 billion lines of COBOL code in active use

- 5 billion lines of COBOL code are newly written each year

- 95% of all „in person transactions“ or „ATM-swipes“ are handled by COBOL

- There are 200 times more COBOL transactions per day than Google searches.

- There are 20 to 100 billion COBOL transactions per day

These numbers sound impressive and the attribution to „Gartner“ makes them almost impeccable when you omit the fact that this survey was conducted in 1997, that it was heavily biased and that it had only 421 participants.

Let’s examine all these claims in detail:

„50-60% of all the code in the world is written in COBOL“

Do you have any idea how big the software industry is these days? 300 billion lines (assuming that number were correct, see below) are supposed to be 50-60% of that? So there are fewer than 300 billion lines of non-COBOL code in the world? This is absolutely laughable! Just for reference: 1.1 billion new computer viruses are written per year.

This number also comes from the „Gartner“ survey in 1997, which – again – was a survey of mainframe users only. So this number only says that 50-60% of all mainframe software is written in COBOL. That is plausible, given that there were hardly any other programming languages available for mainframes.

But I want to point out: the early sources are fully aware that they are falsifying this data: they deliberately hide the qualification „mainframe software“ by rebranding it as „business software“, „professional software“, „mission-critical software“ or similar.

According to the TIOBE Index, COBOL has a market share of about 0.5% today, so the most plausible estimate is that about 0.5% of all the code in the world is written in COBOL.

„There are 150 to 300 billion lines of COBOL code“ and „5 billion lines of COBOL code are newly written each year“

In 1995 Gartner projected that fixing the Y2K bug would cost $1 per line or $300 billion total. This is the only involvement of Gartner in these numbers! As early as 1996, people had already re-interpreted these numbers as „according to Gartner, there are 300 billion lines of code in all programming languages combined“. Gartner had also said that only 1 in 50 lines of code would have to be changed – so there might as well be 15 trillion lines of code in all programming languages combined, „according to Gartner“.

Now, since people believed that 50-60% of all the code in the world was written in COBOL (see above) and 50 and 60% of 300 billion lines are 150 and 180 billion lines respectively, this was re-interpreted to 150-180 billion lines of COBOL code. These numbers were then only handed down from article to article and occasionally increased with no further justification.

Let me repeat: COBOL had 50-60% market share – but only in the mainframe niche! These numbers don’t apply to the rest of the IT world, so this conclusion is complete nonsense!
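The chain of re-interpretations in this section can be retraced with a few lines of arithmetic. This Python sketch uses only the figures quoted above ($300 billion, $1 per line, 1 in 50 lines, 50-60%); the variable names are mine.

```python
# Gartner's 1995 Y2K projection as quoted in the text.
Y2K_COST_USD = 300_000_000_000
COST_PER_LINE_USD = 1

# Step 1: the cost figure is re-read as a line count.
implied_lines = Y2K_COST_USD // COST_PER_LINE_USD

# Alternative reading: if only 1 in 50 lines needs a fix, the same
# projection would imply 50 times as much code overall.
all_code_if_1_in_50 = implied_lines * 50

# Step 2: the mainframe-only share of 50-60% is applied to that line count.
cobol_lines_low = implied_lines * 50 // 100
cobol_lines_high = implied_lines * 60 // 100

print(f"implied lines:       {implied_lines:,}")
print(f"1-in-50 reading:     {all_code_if_1_in_50:,}")
print(f"'COBOL lines' range: {cobol_lines_low:,} to {cobol_lines_high:,}")
```

Both steps rest on an input that was never meant to be used that way: a cost estimate in step 1 and a mainframe-only share in step 2.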

„COBOL handles 95% of all credit card swipes“

This number, again, comes from the „Gartner“ survey. So this number, again, only says that 95%

1. of mainframe owners

2. who handle credit card swipes

use their mainframes for the credit card swipes.

Given the small number of 421 participants and the divisibility by 5, I suspect that we are talking about 20 banks, 19 of which used their mainframes for processing credit card swipes.

Today, there are only about 10,000 mainframes left in the world, but there are 25,000 banks in the world. So even assuming that every mainframe is owned by a different bank, and assuming that these banks handle 100% of their credit card swipes with these mainframes, the most generous estimate still says that COBOL handles no more than 40% of all credit card swipes.
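Both figures in this section are simple ratios. The Python sketch below reproduces them from the numbers in the text. Note that it counts banks, not transaction volume, so it implicitly assumes that all banks process a similar number of swipes; that assumption is part of this sketch and is not backed by data.

```python
# Suspected origin of the 95%: 19 out of 20 surveyed banks (my guess, see text).
suspected_survey_share = 19 / 20

# Upper bound today, under the most mainframe-friendly assumptions:
# every mainframe belongs to a different bank and handles all of its swipes.
MAINFRAMES_WORLDWIDE = 10_000
BANKS_WORLDWIDE = 25_000
upper_bound_share = MAINFRAMES_WORLDWIDE / BANKS_WORLDWIDE

print(f"suspected survey result: {suspected_survey_share:.0%}")
print(f"upper bound today:       {upper_bound_share:.0%}")
```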

„There are 20 to 100 billion COBOL transactions per day“

This number was possibly the hardest to fact-check. In my investigation on the subject I have found out that originally, 20 billion CICS (not COBOL) transactions per day were reported by IBM in a public telephone conference on September 9th, 1998 and that number was widely published by CNN 6 weeks later. Given the proximity between the Gartner survey and the telephone conference, I guess that the 20 billion CICS transactions per day originally also come from the „Gartner“ survey.

IBM proudly compared this to the number of web-hits, considering themselves bigger than ~~Jesus~~ the internet. When the number of web-hits had grown to 30 billion per day in 2000, they apparently didn’t want to stop considering themselves bigger than ~~Jesus~~ the internet and simply changed the 20 billion CICS transactions to 30 billion CICS transactions. They only justified this with the growth of the internet (which would only make sense if the internet had had zero growth outside of CICS-related hits!). This number was picked up in a blog post by Scott Ankrum on cobolreport.com which you will see as the source in countless articles on the subject.

Every article that reports a higher number of „COBOL“ transactions has simply added 10 billion more transactions for roughly every two years between 2000 and the release of the article. So they claim that this imaginary growth between 1998 and 2000 has continued linearly for 20 years.

In my other article on the subject I have concluded that there were likely only between 90 million and 1.8 billion CICS transactions per day in 1998.
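The „plus 10 billion every two years“ pattern can be written down as a one-line rule. This Python sketch is my own reading of that pattern, not a formula anyone has published; the text only says „roughly“, so individual articles may deviate from it.

```python
BASE_YEAR = 2000
BASE_CLAIM_BILLION = 30      # the adjusted CICS figure from 2000
GROWTH_PER_YEAR_BILLION = 5  # 10 billion for every two years


def claimed_transactions_billion(article_year):
    """Daily 'COBOL' transactions (in billions) an article from that year would claim."""
    return BASE_CLAIM_BILLION + GROWTH_PER_YEAR_BILLION * (article_year - BASE_YEAR)


for year in (2000, 2006, 2014):
    print(year, claimed_transactions_billion(year), "billion per day")
```

The only measured input in this rule is the 20 billion figure from 1998 behind the base value; everything after that is extrapolation.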

„There are still 200 times more COBOL Transactions than Google Searches“

As I have detailed in my blog article on the subject, this is just the 30 billion CICS transactions from above divided by the 150 million Google searches per day in 2003. I don’t think that COBOL’s made-up numbers from 2000 and Google’s numbers from 2003 have any significance for COBOL’s relevance today.

Also, CICS transactions and Google searches are used in very different circumstances, so it doesn’t make sense to compare the numbers in the first place.

The whole claim also doesn’t make sense from the perspective that there are only 10,000 mainframes left in the world, but Google owns 2.5 million servers. Mainframes would need to have 50,000 times the performance of an x86 server for this to check out, but in reality, x86 servers dominate the list of the Top 500 fastest supercomputers in the world. That list hasn’t contained a single mainframe since 1999.
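Both the factor of 200 and the factor of 50,000 follow directly from the numbers quoted in this section. The Python sketch below retraces them; it assumes, purely for the sake of the argument, that one transaction costs about as much work as one search.

```python
CICS_TRANSACTIONS_PER_DAY = 30_000_000_000     # adjusted IBM figure from 2000
GOOGLE_SEARCHES_PER_DAY_2003 = 150_000_000     # Google's figure from 2003
MAINFRAMES_WORLDWIDE = 10_000
GOOGLE_SERVERS = 2_500_000

# Origin of the "200 times more" claim.
transaction_ratio = CICS_TRANSACTIONS_PER_DAY // GOOGLE_SEARCHES_PER_DAY_2003

# Google has this many servers per mainframe in the world.
servers_per_mainframe = GOOGLE_SERVERS // MAINFRAMES_WORLDWIDE

# Throughput each mainframe would need, relative to one server.
required_speedup = transaction_ratio * servers_per_mainframe

print(transaction_ratio, servers_per_mainframe, required_speedup)
```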

„There are 2 Million COBOL Programmers“

The most important question about COBOL these days is how many COBOL programmers are left in the world. MicroFocus claims that there are 2 million, but that number comes from a study in the year 2000 – right after a boom in COBOL demand due to the Y2K bug!

About 5% of the COBOL developers retire each year, so of the 2 million developers in 2000, only 716,971 are left in 2020. There are initiatives to get new programmers into COBOL, but they can only recruit about 15,000 developers per year (although they try to obfuscate this by publishing the total number of recruits of 12 years combined). Factoring this in, we arrive at roughly 1 million developers, not 2 million.

One million doesn’t sound too bad, compared to 4 million C++ programmers for example, but the big difference is the age of the COBOL programmers: 72% of them will have retired by 2030 and 150,000 will be newly recruited. So in 2030, there will be only about 430,000 COBOL developers left. Is that enough to sustain a COBOL ecosystem of 300 billion lines of code? I don’t think so.
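The projection above can be retraced in a few lines of Python. The sketch uses only the rates quoted in the text and makes one simplifying assumption of its own: newly recruited developers do not retire within the period considered.

```python
DEVELOPERS_2000 = 2_000_000
RETIREMENT_RATE = 0.05        # share of the remaining veterans retiring per year
RECRUITS_PER_YEAR = 15_000

# 2000 -> 2020: compound decline of the original 2 million ...
veterans_2020 = int(DEVELOPERS_2000 * (1 - RETIREMENT_RATE) ** 20)
# ... plus 20 years of recruiting (assumed not to retire yet).
developers_2020 = veterans_2020 + RECRUITS_PER_YEAR * 20

# 2020 -> 2030: start from the rounded 1 million, 72% retire, 10 years of recruits.
ROUNDED_DEVELOPERS_2020 = 1_000_000
RETIRED_BY_2030_PERCENT = 72
developers_2030 = (
    ROUNDED_DEVELOPERS_2020 * (100 - RETIRED_BY_2030_PERCENT) // 100
    + RECRUITS_PER_YEAR * 10
)

print(f"veterans left in 2020: {veterans_2020:,}")
print(f"developers in 2020:    {developers_2020:,}")
print(f"developers in 2030:    {developers_2030:,}")
```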

Because of that, some COBOL programmers even come out of retirement for royal salaries (like the Cobol Cowboys). There are even rumors of recruiters headhunting at retirement homes.

„COBOL is easy to learn / easy to read“

COBOL advocates always emphasize how „easy“ COBOL is to learn and how self-explanatory its code is. I strongly disagree for many reasons.

You can write simple programs in any language with only a few syntactic structures: input & output, variables, arithmetic, branching (if-then-else) and loops. You can learn any high-level programming language to that extent in one day. But that doesn’t enable you to maintain someone else’s code, who has used tons of verbs and modifiers you don’t know. I have years of experience in COBOL and every time I look at a new piece of COBOL code, I’m completely lost, because I’m never sure if I’m looking at a variable or a verb/modifier I don’t know.

Also, most COBOL source code isn’t documented, and even if you do have documentation, you will hardly understand it, because nearly everything is named differently in the COBOL world. You will just not know what they meant by the words you read. For example, would you understand a comment that tells you that the code „catalogs a dataset on a DASD“?

Also, COBOL code tends to have a very twisted logic: jumping around (spaghetti code), passing data via global variables, unscoped variables, tight coupling. You can hardly touch any line of code without breaking something seemingly unrelated.

I believe that this is the main reason why COBOL is the 2nd most hated programming language in the world (which I don’t think would be the case if COBOL was actually „easy“).

For more details, see my blog article on the topic.

„COBOL Programs are faster“

This claim might be the one you see the most and it has certainly been true in the past, but this was due to mainframe hardware being vastly superior at the time. Multiple processors, arithmetic co-processors, dedicated I/O controllers: every computer has these today. The list of Top 500 fastest supercomputers in the world hasn’t contained a single mainframe since 1999.

Comparing programming languages by the power of the hardware that the compiled programs run on is complete nonsense anyway.

Comparing the performance of COBOL programs and programs written in other languages is hard, because they are tailored towards computers with different architectures.

But there are many problems in the basic design of COBOL and the programs written in it, which have negative effects on the running times. Early versions of COBOL didn’t support more than 64 KB of RAM for example, so COBOL programs often do their work by moving data back-and-forth between dozens of auxiliary files on the hard-disk, to save RAM. Depending on the speed of the hard-disk, this makes these programs 20 to 20,000 times slower than corresponding programs that process their data in-memory, which you can do without any problems on modern computers that have dozens of GB of RAM.

Also, COBOL doesn’t (officially) support pointers, so many COBOL programs keep copying data back and forth in memory, wasting a lot of time.

Another reason why COBOL used to be faster is VSAM, a technology that lets you map your table structures directly to disk locations. This made data quickly accessible, because you could directly calculate a table row’s physical location without consulting an index (which was a time-consuming operation on tape drives and hard disk drives).

With solid-state drives, consulting an index is fast. VSAM gives you no advantage anymore. On the contrary: the additional work for maintaining VSAM is a lose-lose situation today.

A similar claim you might see is that COBOL could handle „thousands“, „millions“ or even „billions of transactions per second“. Again – this would be the speed of the hardware, not COBOL’s accomplishment. But the problems in New Jersey have shown that the average mainframe can’t even handle a million transactions per month. For more details, see my blog article on the topic.

Also, you have to consider that the majority of mainframes are over 17 years old and have less processing power than a $35 Raspberry Pi 2 (no exaggeration!).

„COBOL Programs are more secure“

This claim comes from a time when mainframes were only used inside a closed network. Of course programs are rather „secure“ when you have total control over who has any access to them.

But a 2017 study showed that COBOL programs are actually 5 times more vulnerable to cyber attacks than programs written in other languages. COBOL programs are (for example) particularly vulnerable to SQL injection attacks – and such vulnerabilities aren’t so easy to fix.

This makes it particularly dangerous to migrate COBOL programs into the cloud, where the world is only one (probably much too weak) password or one vulnerability in the VPN software away from having access to them.

For more details, see my blog article on the topic.

„COBOL computations are more precise“

This might be the most impudent lie you will find over and over in these articles. COBOL is Turing complete, just like C, C++, C#, Java, Python, and almost every programming language there is. Therefore it is mathematically impossible for COBOL to be „more precise“ than other languages.

COBOL’s internal representation of rational numbers is different from most modern languages. Every representation (including the one COBOL uses) has problems with numbers that are repeating in their respective numeral system.

COBOL advocates have constructed examples in which COBOL’s representation has a small advantage and ignore the fact that there are also examples in which COBOL’s representation has a huge disadvantage (multiples of 1/3 for example). This is how they came up with this preposterous claim.

If anything, COBOL computations are less precise than IEEE 754 floating point calculations, because in the cases where COBOL has an advantage, it has an advantage of maybe 0.000000000000000001%, whereas in cases where COBOL has a disadvantage, it has a disadvantage of easily 10%.
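Both kinds of examples can be reproduced outside of COBOL. The following Python sketch uses the standard `decimal` module as a stand-in for a decimal fixed-point field. The field with a single fractional digit and the truncating assignment are assumptions I chose to make the effect visible; real COBOL fields usually carry more digits, which makes the error smaller but doesn’t remove it.

```python
from decimal import Decimal, ROUND_DOWN
from fractions import Fraction

# Case 1: the advocates' favourite example. 0.1 and 0.2 are not exactly
# representable in binary floating point, but they are in decimal.
binary_sum = 0.1 + 0.2
decimal_sum = Decimal("0.1") + Decimal("0.2")
binary_rel_error = abs(Fraction(binary_sum) - Fraction(3, 10)) / Fraction(3, 10)

# Case 2: 1/3 is repeating in decimal. Store it in a hypothetical field with
# one fractional digit (truncated, not rounded), then multiply by 3.
third_fixed = (Decimal(1) / Decimal(3)).quantize(Decimal("0.1"), rounding=ROUND_DOWN)
fixed_product = third_fixed * 3
fixed_rel_error = abs(Fraction(fixed_product) - 1)

# The same computation in binary floating point.
float_product = (1 / 3) * 3
float_rel_error = abs(Fraction(float_product) - 1)

print("binary error for 0.1 + 0.2:", float(binary_rel_error))
print("fixed-point error for 1/3 * 3:", float(fixed_rel_error))
print("binary error for 1/3 * 3:", float(float_rel_error))
```

The decimal representation wins the first case by a margin far below anything a business application would notice, and loses the second case by a margin that depends entirely on how many digits the field was declared with.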

For more details, see my blog article on this topic.

„COBOL is cross platform compatible“

In 1959, this was a reasonable statement, compared to the alternatives. Back then, programmers were used to hand-writing machine-code that would work on one microchip and one microchip only. Even Assembly was a luxury back then. Compared to that, any high-level programming language would have been considered „cross platform compatible“. A semantic statement like „DISPLAY X.“ that worked on all mainframes alike? That was unheard of!

But CODASYL has only defined COBOL’s syntax, not the formal semantics. Because of that, there are over 300 mutually incompatible dialects of COBOL, which are all „correct“. A program from one mainframe can and will do different things on different mainframes and even on different versions of the same mainframe. See here for some examples.

Also, most manufacturers provide proprietary extensions – this also breaks the cross platform compatibility.

So by today’s standards, COBOL is nowhere near „cross platform compatible“. By today’s standards, there has to be one consortium (mostly the ISO) that defines the one and only „correct“ interpretation of a source code.

„COBOL is modern“[sic]

When you think of COBOL, you think of IBM 3270 Terminals and their green on black text-mode, Tape-Reels and dot-matrix printers.

There are COBOL Compilers for Windows and Linux that can translate to JVM and CLR. Some companies even offer plugins for Eclipse and Visual Studio, so you can use a nice IDE and state-of-the-art version control systems like git.

In 2002, the COBOL standard even included object oriented programming (OOP). But that part was made optional in 2014, because almost no compiler had implemented it.

Running on modern systems or having OOP doesn’t magically make a language „modern“ though. An old car doesn’t become a new car by driving on a new street either. Just look at the example at codinghorror.com! Does that look „modern“ compared to the C# example there?

Also, Object Orientation is not a fixed thing – it evolves. To this day, COBOL doesn’t support exceptions, generics, coroutines or lambdas. What COBOL calls „Object Orientation“ is an early 1990s version of „Object Orientation“.

So – no – COBOL is not modern. COBOL is 30 years behind „modern“ and it falls further behind every day!